The Best AI Large Language Models of 2025: Why the Winning Strategy Is a Stack, Not a Single Model

In 2025, the smartest AI users stopped obsessing over “the best” large language model and started thinking like engineers. Instead of waiting for one perfect system that could do everything, they built stacks: combinations of models, each chosen for a particular strength such as coding, high‑volume throughput, creativity, safety, or cost.

The shift was subtle but decisive. Models stopped being treated as quirky personalities to chat with and started being evaluated as tools in a toolkit. The people and teams who got the most out of AI weren’t the ones who found a magic model; they were the ones who assembled the right mix.

Below is the kind of stack that defined 2025—and the models that genuinely earned a spot in it.

—

The 2025 Playbook: Build a Stack, Not a Monolith

The defining strategy of 2025 was abandoning the hunt for a single “best” LLM. Instead, advanced users organized their workflows around:

– A premium, high‑accuracy model for critical tasks

– A low‑cost but capable model for bulk work

– A specialized model for fiction and long‑form creativity

– A constrained or alignment‑tuned model for sensitive scenarios

– Optional add‑ons for vision, codebase‑wide reasoning, and autonomous agents

This approach mirrored how professionals already treat software. Nobody expects one app to handle spreadsheets, video editing, and 3D rendering. Likewise, expecting one LLM to be unbeatable at coding, storytelling, research, and compliance turned out to be a losing game.

—

Claude: The High‑End Workhorse for Coding and Editing

At the top of many 2025 stacks sat a premium model like Claude, used for:

– High‑stakes code generation and refactoring

– Deep code reviews with reasoning about architecture and trade‑offs

– Precision editing of technical documents, contracts, and reports

– Complex reasoning over long inputs, such as entire repositories or policy documents

What made models in this tier stand out was not just raw intelligence, but reliability. They excelled at:

– Following nuanced instructions

– Maintaining style and tone across long documents

– Handling subtle logic and edge cases in code

For teams, this “premium slot” was where quality mattered more than price. One accurate, context‑aware answer could easily be worth thousands of cheap but messy responses.

—

DeepSeek or Qwen: The Volume Engines

For heavy workloads—data cleaning, bulk content drafting, mass classification, or exploratory coding—price and throughput became crucial. That’s where more affordable models like DeepSeek or Qwen carved out their niche.

Typical use cases included:

– Generating large numbers of product descriptions, emails, or summaries

– Running wide sweeps over prompt variations and ideas

– Rapidly exploring multiple design or code alternatives

– Handling back‑office automation where perfect style wasn’t required

They weren’t always the most polished or subtle, but for many tasks, “good enough at scale” beat “perfect but expensive.” The winning move was to:

1. Use cheaper models for first drafts and bulk work

2. Pipe the best outputs into a premium model (like Claude) for refinement

This two‑step pattern became standard in marketing, software development, and research teams alike.
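The two‑step pattern is simple to express in code. The sketch below is a minimal illustration, not any vendor's API. It assumes each model is wrapped as a plain Python function from prompt to text. The `score` heuristic that decides which drafts get forwarded is a hypothetical stand‑in for whatever filter a team actually uses.

```python
from dataclasses import dataclass
from typing import Callable, List

Model = Callable[[str], str]  # hypothetical wrapper: prompt in, text out


@dataclass
class DraftThenRefine:
    cheap: Model                   # low-cost model for bulk first drafts
    premium: Model                 # premium model for final refinement
    score: Callable[[str], float]  # hypothetical heuristic for ranking drafts
    n_drafts: int = 5
    keep: int = 1

    def run(self, task: str) -> List[str]:
        # Step 1: generate many cheap first drafts.
        drafts = [self.cheap(f"{task}\n(Variant {i + 1})") for i in range(self.n_drafts)]
        # Keep only the top-scoring drafts so premium calls stay few.
        best = sorted(drafts, key=self.score, reverse=True)[: self.keep]
        # Step 2: send only the survivors to the premium model.
        return [self.premium(f"Refine this draft:\n{d}") for d in best]
```

The design keeps premium spend proportional to `keep`, not `n_drafts`: you can raise the number of cheap drafts freely while the number of expensive calls stays fixed.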

—

Muse: Fiction, Narrative, and Long‑Form Creativity

While general LLMs can write, dedicated creative models like Muse emerged as the go‑to choice for storytelling and narrative‑heavy tasks. These models were optimized for:

– Cohesive multi‑chapter fiction and serial storytelling

– Character consistency across long arcs

– World‑building, lore generation, and dialogue

– Screenplays, comics, games, and transmedia projects

Where typical models sometimes drifted, contradicted themselves, or lost the emotional thread, creative‑first models focused on:

– Maintaining continuity over long contexts

– Preserving tone and voice

– Handling complex interpersonal dynamics and plotlines

Writers and creative studios increasingly used Muse‑style models as collaborative partners: not to replace human authors, but to generate outlines, alternative scenes, side characters, and world details at scale.

—

Dolphin: When Control and Constraints Matter

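On the opposite end of the spectrum sat models like Dolphin, valued less for raw creativity and more for how tightly they could be controlled. Dolphin models are released with most built‑in refusals filtered out, so their behavior is shaped largely by the system prompt and by whatever guardrails the deploying team adds.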

These models were favored when:

– Compliance, safety, or policy adherence was crucial

– Outputs had to stick closely to templates or regulatory requirements

– You needed behavior that was as predictable and reproducible as possible

– You were working with sensitive internal data and strict guardrails

In 2025, the question stopped being “Which model is smartest?” and became “For which tasks can we not afford surprises?” Dolphin‑type models earned their place where predictability, constraint, and alignment took priority over flair.
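Whatever model fills this slot, the template requirement can also be enforced in code rather than left to the model alone. Here is a minimal sketch of such a guardrail. It assumes a hypothetical JSON template with three required keys and a model wrapped as a prompt‑to‑text function. Outputs that fail to parse or do not match the template are retried. After a few attempts the call returns `None` so a human can take over.

```python
import json
from typing import Callable, Optional

Model = Callable[[str], str]  # hypothetical wrapper: prompt in, text out

# Hypothetical output template: the exact keys every response must contain.
REQUIRED_KEYS = {"decision", "policy_reference", "explanation"}


def constrained_call(model: Model, prompt: str, max_attempts: int = 3) -> Optional[dict]:
    """Return a template-conforming response, or None after max_attempts failures."""
    keys = ", ".join(sorted(REQUIRED_KEYS))
    request = f"{prompt}\nRespond only with a JSON object with exactly these keys: {keys}."
    for _ in range(max_attempts):
        raw = model(request)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not valid JSON: retry
        # Accept only a JSON object whose keys match the template exactly.
        if isinstance(data, dict) and set(data) == REQUIRED_KEYS:
            return data
    return None  # escalate to a human rather than accept a non-conforming output
```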

—

From Chat Partners to Power Tools

Perhaps the biggest cultural shift of 2025 was psychological. Models were no longer treated like chatty companions; they became infrastructure.

This reframing changed how advanced users behaved:

– Prompts became specifications, not conversations.

– Evaluation was based on latency, accuracy, cost, and controllability—not vibes.

– People compared models like they compared CPUs, GPUs, or cloud services.

The result: far less time arguing about which model felt “smarter,” and far more time designing workflows, pipelines, and agent systems that combined models in logical ways.

—

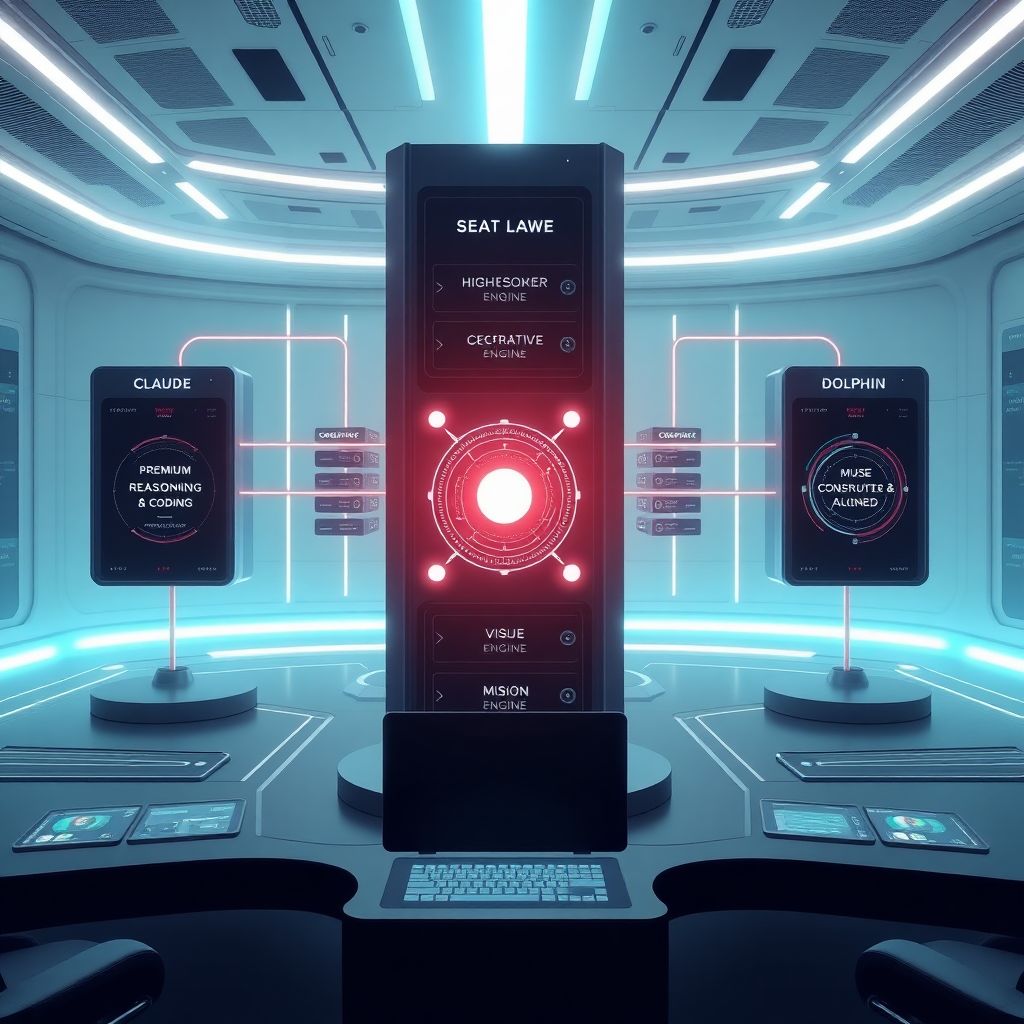

How People Actually Built Their 2025 AI Stacks

In practice, a typical professional or team stack in 2025 looked something like this:

1. Premium reasoning and coding model

For solving complex problems, serious code work, and mission‑critical documents.

2. Low‑cost general model

For brainstorming, bulk drafting, and quick experiments.

3. Creative specialist

For fiction, storytelling, branding concepts, and narrative‑driven campaigns.

4. Tightly aligned / constrained model

For regulated industries, HR tasks, legal‑adjacent content, or anything where you couldn’t risk a rogue output.

5. Vision‑capable model (optional but common)

For reading screenshots, diagrams, UI mockups, and even code as it appears in IDE or repository views.

6. Agentic layer on top

Scripts or tools that chained calls to multiple models, using each where it was strongest.

The sophistication wasn’t in any one model; it was in how they were orchestrated.
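One way to picture that orchestration layer is as a routing table. The sketch below assumes placeholder model identifiers (`premium-model`, `budget-model`, and so on). It also assumes a hypothetical `call(model_id, prompt)` hook that wraps whatever provider SDKs you use. The task‑to‑role mapping is illustrative only.

```python
from enum import Enum
from typing import Callable, Dict


class Role(Enum):
    PREMIUM = "premium"          # complex problems, serious code, critical documents
    BULK = "bulk"                # brainstorming, bulk drafting, quick experiments
    CREATIVE = "creative"        # fiction, storytelling, narrative campaigns
    CONSTRAINED = "constrained"  # regulated, HR, or legal-adjacent content
    VISION = "vision"            # screenshots, diagrams, UI mockups


# Hypothetical model identifiers: substitute whatever your providers expose.
STACK: Dict[Role, str] = {
    Role.PREMIUM: "premium-model",
    Role.BULK: "budget-model",
    Role.CREATIVE: "fiction-model",
    Role.CONSTRAINED: "guarded-model",
    Role.VISION: "vision-model",
}

# Illustrative task-to-role mapping; a real team would tune this to its own work.
ROUTES: Dict[str, Role] = {
    "code_review": Role.PREMIUM,
    "contract_edit": Role.PREMIUM,
    "product_descriptions": Role.BULK,
    "brainstorm": Role.BULK,
    "short_story": Role.CREATIVE,
    "hr_reply": Role.CONSTRAINED,
    "screenshot_triage": Role.VISION,
}


def dispatch(task_type: str, prompt: str, call: Callable[[str, str], str]) -> str:
    """Pick the model for this task type, then call it via the hypothetical hook."""
    if task_type not in ROUTES:
        raise KeyError(f"no route configured for task type {task_type!r}")
    return call(STACK[ROUTES[task_type]], prompt)
```

Raising on unknown task types is a deliberate choice. Silently defaulting to the cheap model would send new, possibly critical work to the least careful tier.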

—

Vision Models: From Images to Entire Codebases

2025 also saw a quiet revolution on the vision side. Models that once merely “described images” evolved into systems that could:

– Parse complex dashboards and design mocks

– Understand diagrams, schematics, and charts alongside text

– Read and reason about entire codebases by navigating screens, IDEs, or repository views

Developers discovered they could point a vision‑enabled model at a screenshot of an error log, a UI layout, or even a whiteboard photo—and get actionable insights. Product teams used them to:

– Analyze UX flows from screenshots

– Compare design variants

– Detect layout issues or inconsistent patterns

For engineering organizations, vision models bridged the gap between textual reasoning and visual reality, blurring the old boundary between “code” and “interface.”

—

Agentic Tasks: Models That Act, Not Just Answer

Another big trend of 2025 was the rise of agentic workflows—LLM‑powered systems that could take multi‑step actions, not just spit out single responses.

Instead of simply asking a model, “How do I refactor this code?”, people wired up systems that:

1. Analyzed a repository with a premium coding model

2. Generated a refactor plan

3. Used a cheaper model for boilerplate modifications

4. Called tools such as git and the CI pipeline to apply the changes and run the tests

5. Used a constrained model to audit changes for policy and safety

Similar patterns appeared in research, sales, and operations. The LLM wasn’t the entire product; it was the brain inside an automated workflow.
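The refactoring workflow described above can be sketched as a small agent loop. This is an illustration under stated assumptions, not a reference implementation. The repository is modeled as a dict of file paths to source text, and the three models are plain prompt‑to‑text functions. `run_tests` is a hypothetical hook standing in for real git and CI integration.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Model = Callable[[str], str]  # hypothetical wrapper: prompt in, text out


@dataclass
class RefactorAgent:
    premium: Model                               # analysis and planning
    cheap: Model                                 # boilerplate edits
    auditor: Model                               # constrained policy/safety check
    run_tests: Callable[[Dict[str, str]], bool]  # hypothetical hook wrapping git + CI
    log: List[str] = field(default_factory=list)

    def run(self, repo: Dict[str, str]) -> Optional[Dict[str, str]]:
        """Return the refactored repo, or None if tests or the audit fail."""
        listing = "\n".join(f"== {path}\n{src}" for path, src in repo.items())
        # 1. Analyze the repository with the premium model.
        analysis = self.premium(f"Analyze this repository:\n{listing}")
        # 2. Ask the premium model for a plan: one file path per line.
        plan = self.premium(f"Write a refactor plan, one file path per line:\n{analysis}")
        targets = [line.strip() for line in plan.splitlines() if line.strip() in repo]
        self.log.append(f"plan targets: {targets}")
        # 3. Let the cheap model apply the mechanical edits, file by file.
        changed = dict(repo)
        for path in targets:
            changed[path] = self.cheap(f"Apply the plan to {path}:\n{plan}\n---\n{repo[path]}")
        # 4. Run the test suite through the external tool hook.
        if not self.run_tests(changed):
            self.log.append("tests failed")
            return None
        # 5. Have the constrained model audit the changes before anything ships.
        diff = "\n".join(f"{p}: {repo[p]!r} -> {changed[p]!r}" for p in targets)
        verdict = self.auditor(
            f"Audit these changes for policy and safety. Reply APPROVE or REJECT.\n{diff}"
        )
        if not verdict.strip().upper().startswith("APPROVE"):
            self.log.append("audit rejected")
            return None
        return changed
```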

—

How to Choose Models for Your Own Stack in 2025

By 2025, picking models resembled building a tech stack. The most effective teams used a few clear criteria:

– Task type

Coding, creative writing, data analysis, legal drafting, customer support, etc.

– Risk tolerance

Can you tolerate occasional hallucinations or style drift, or do you need rock‑solid accuracy?

– Volume and budget

Do you need thousands of outputs per day, or just a handful of critical ones?

– Data sensitivity

Are you working with public, semi‑sensitive, or highly confidential information?

– Control needs

Do you need to enforce strict policies, formatting, and behavior?

Once you have those answers, model selection almost makes itself: premium models for critical work, cheaper models for scale, creative specialists where narrative matters, and constrained models where control matters.
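These criteria can be written down as an explicit decision rule. The sketch below is one possible encoding. It assumes a hypothetical `TaskProfile` with one boolean per criterion and an illustrative set of creative task types. The order of the checks, with control and confidentiality first, is a judgment call and not a standard.

```python
from dataclasses import dataclass
from typing import Tuple

# Illustrative set of narrative-heavy task types.
CREATIVE_TASKS = {"fiction", "screenplay", "worldbuilding", "brand_story"}


@dataclass(frozen=True)
class TaskProfile:
    task_type: str        # e.g. "coding", "fiction", "support"
    needs_accuracy: bool  # low risk tolerance: hallucinations or drift are unacceptable
    high_volume: bool     # thousands of outputs per day rather than a handful
    confidential: bool    # highly confidential data is involved
    strict_control: bool  # strict policies, formatting, or behavior must be enforced


def choose_tiers(p: TaskProfile) -> Tuple[str, ...]:
    """Return the stack tiers to use, in pipeline order (first tier runs first)."""
    # Control and confidentiality come first: these are the no-surprises tasks.
    if p.strict_control or p.confidential:
        return ("constrained",)
    # Narrative work goes to the creative specialist.
    if p.task_type in CREATIVE_TASKS:
        return ("creative",)
    # Critical work at scale: draft cheaply, then refine with the premium model.
    if p.needs_accuracy and p.high_volume:
        return ("bulk", "premium")
    if p.needs_accuracy:
        return ("premium",)
    # Nothing here demands premium quality, so default to the cheaper tier.
    return ("bulk",)
```

Returning a tuple of tiers lets the same function express the draft‑then‑refine pattern. A high‑volume task that still needs accuracy gets `("bulk", "premium")`.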

—

What This Means for the “Best Model” Question

The clear lesson from 2025 is that there is no single winner. Asking “What is the best LLM?” increasingly misses the point.

More meaningful questions are:

– “What is the best model for this specific job?”

– “Which combination of models gives me the best result per dollar?”

– “Where do I need control, and where can I trade precision for speed and cost?”

In that sense, the “best” model of 2025 was not a single system at all—but the intelligently assembled stack in the hands of a user who knew how to treat AI as infrastructure rather than a novelty.

Those users, and those stacks, are the ones that defined the year.