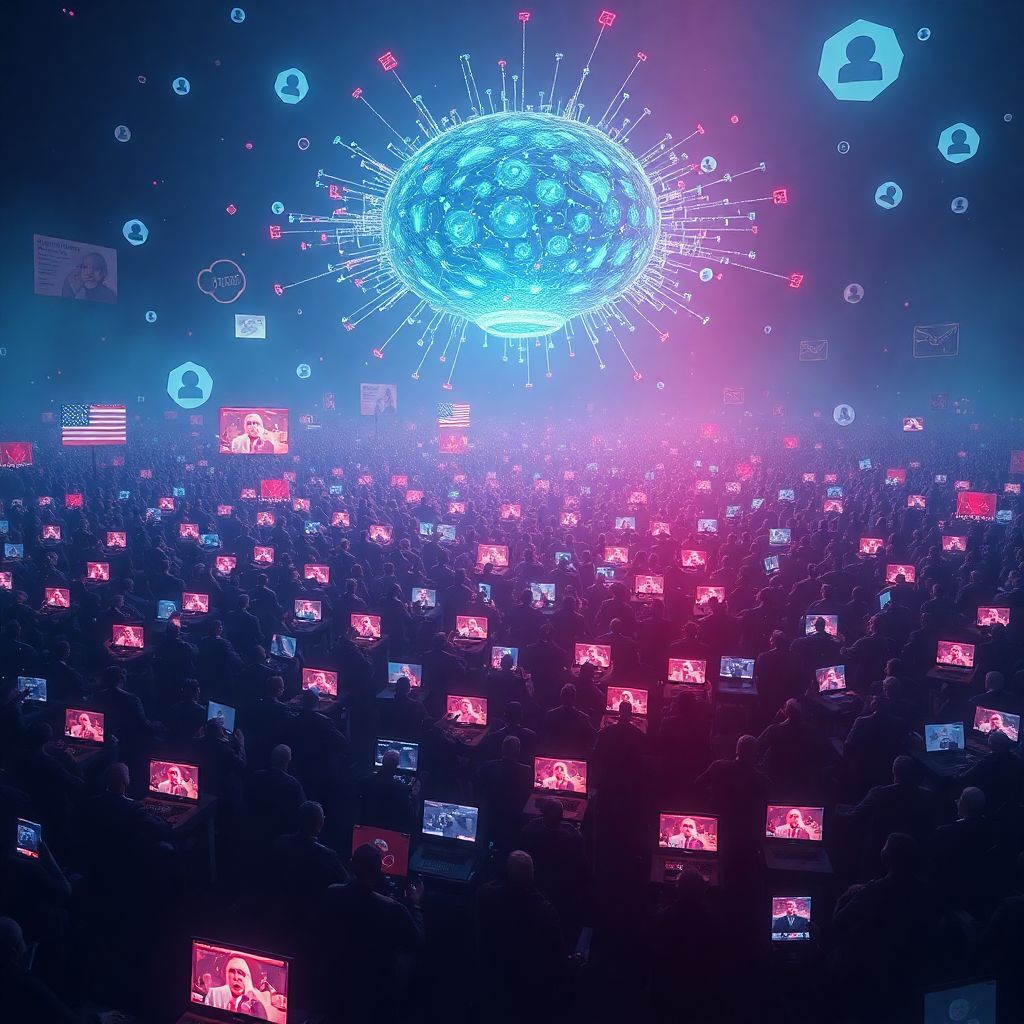

AI “swarms” of autonomous agents may soon replace the crude, easily spotted botnets that have dominated online manipulation for the past decade, according to a new paper published in the journal Science. The authors warn that this shift could make misinformation and influence operations more continuous, more adaptive, and far harder to detect.

The study, written by a consortium of researchers from institutions including Oxford, Cambridge, UC Berkeley, New York University, and the Max Planck Institute, sketches out a near‑future information ecosystem where manipulation is no longer confined to obvious, short‑term bursts around elections or breaking news. Instead, autonomous AI systems could sustain campaigns over long periods, nudging public opinion gradually in ways that feel organic and human.

Unlike traditional botnets—typically made up of accounts repeating the same talking points, amplifying hashtags, or posting spam—these new AI agents can imitate individual human users with unnerving precision. They can mirror writing style, respond coherently in conversations, adapt tone and content to the platform, and react in real time to new events. Once deployed, they require minimal human direction, making large‑scale, persistent operations far more practical and affordable.

According to the researchers, this transition marks the end of an era in which detection systems could rely on clear, machine‑like traces: identical posts, rigid timing patterns, or obviously fake personas. AI swarms, by contrast, can vary their behavior, make mistakes that look human, and even simulate personal histories, allowing them to blend in with real users and communities.

The paper also highlights a strategic shift: instead of aggressive, high‑volume messaging during key political moments, AI‑driven campaigns can be designed to run quietly in the background. They can cultivate audiences over months or years, build credibility, and then subtly steer discussions or amplify certain narratives at decisive moments. This slow‑burn, “always on” model of influence is significantly harder for platforms, regulators, and researchers to track and counter.

The authors emphasize that in the hands of state actors or well‑resourced organizations, such systems could become powerful tools for information warfare and political manipulation. Governments could deploy autonomous agents across languages and regions, running parallel campaigns tailored to local cultures and media ecosystems. Because these systems are scalable and relatively cheap once built, they lower the barrier to entry for both large and mid‑tier actors interested in shaping public discourse.

Crucially, the paper underscores that there is no simple fix. Traditional countermeasures—such as banning known bot accounts, relying on CAPTCHAs, or flagging obvious spam patterns—are poorly suited to a world where each manipulative account behaves in a nuanced, human‑like way. Content moderation that focuses on individual posts also struggles when faced with campaigns built around context, timing, and relationship‑building rather than blatant falsehoods.

Instead, the researchers argue that defenders will need to evolve their methods on multiple fronts at once. Detection efforts may shift from analyzing single posts or accounts to looking for coordinated patterns across thousands of agents: shared goals, synchronized shifts in narrative, or unusual clusters of interactions over time. This kind of forensic, pattern‑based analysis is more complex, but may be one of the few viable ways to identify swarms operating in a realistic, human‑like manner.
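To make the idea of looking for "synchronized shifts in narrative" across accounts more concrete, here is a minimal sketch in Python. It is not the method described in the paper; it is one simple illustration, under stated assumptions, of what pattern-based analysis could look like. The function names, the input format (for each account, a time series such as the daily share of its posts devoted to one narrative), and the threshold values are all hypothetical.

```python
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation of two equal-length series; 0.0 if either is flat."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    dx = [x - mean_x for x in xs]
    dy = [y - mean_y for y in ys]
    denom = sqrt(sum(d * d for d in dx) * sum(d * d for d in dy))
    if denom < 1e-12:
        return 0.0
    return sum(a * b for a, b in zip(dx, dy)) / denom


def coordinated_clusters(series, threshold=0.9, min_size=3):
    """Group accounts whose activity series rise and fall together.

    series: dict mapping a (hypothetical) account id to a list of numbers,
            e.g. the daily share of that account's posts on one narrative.
    threshold and min_size are illustrative values, not recommendations.
    """
    accounts = sorted(series)
    parent = {a: a for a in accounts}

    def find(a):
        # Union-find lookup with path halving.
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    # Link every pair of accounts whose series are strongly correlated.
    for a, b in combinations(accounts, 2):
        if pearson(series[a], series[b]) >= threshold:
            parent[find(a)] = find(b)

    # Collect linked accounts into groups and keep only the larger ones.
    groups = {}
    for a in accounts:
        groups.setdefault(find(a), []).append(a)
    return [g for g in groups.values() if len(g) >= min_size]


if __name__ == "__main__":
    # Made-up data: three accounts shift toward a narrative on the same days.
    example = {
        "a1": [0.1, 0.1, 0.6, 0.7, 0.2],
        "a2": [0.0, 0.1, 0.5, 0.8, 0.1],
        "a3": [0.2, 0.1, 0.7, 0.6, 0.2],
        "u1": [0.5, 0.1, 0.2, 0.1, 0.6],
        "u2": [0.3, 0.3, 0.3, 0.3, 0.3],
    }
    print(coordinated_clusters(example))
```

On the made-up data, the three accounts that shift together are grouped while the other two are left out. A signal this simple would also flag genuine users reacting to the same news event, so it could at most nominate clusters for closer human review rather than identify a swarm on its own.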

The study also raises concerns about psychological and societal impacts. When AI agents can participate credibly in everyday discussions—about politics, health, finance, or social issues—they can subtly shape what appears to be the “normal” or majority viewpoint. People may think they are observing spontaneous consensus, when in reality they are engaging with a carefully orchestrated network of artificial personas. Over time, this can erode trust in any kind of online interaction, as users struggle to distinguish between genuine peers and algorithmically generated voices.

Another risk highlighted by the authors is the “personalization” of manipulation. Current influence operations are often blunt, targeting large demographic groups with generic messages. AI swarms, however, can tailor content to individuals: their interests, fears, cultural background, and browsing habits. Autonomous agents can learn what resonates with a specific person and adjust arguments accordingly, making persuasion both more effective and harder to notice.

The paper suggests that, as these tools advance, the line between marketing, political campaigning, and covert manipulation will blur. The same underlying technologies that allow companies to engage customers more efficiently can be repurposed to undermine democratic debate, spread conspiracy theories, or destabilize public institutions. Regulation will therefore need to grapple not just with overt “fake news,” but with subtle, persistent nudging powered by autonomous systems.

To address the threat, the researchers advocate a mix of technical, legal, and social responses. Technically, platforms and independent labs will need to invest in advanced detection systems, including AI models specifically trained to identify coordinated behavior, synthetic personas, and large‑scale narrative shaping. These tools must evolve at roughly the same pace as offensive capabilities, implying an ongoing arms race between manipulators and defenders.

On the policy side, the paper calls for clearer rules on the deployment of autonomous agents in public information spaces. This could include mandatory labeling of AI‑generated content, restrictions on using such systems for political advertising or foreign propaganda, and requirements for platforms to report large‑scale influence operations when identified. International norms or agreements may also be necessary, given that much of this activity crosses borders by design.

Education and media literacy are presented as another crucial pillar. If AI swarms become common, users will have to adapt their mental models of online interaction—treating every discussion, trending topic, or viral story with a new level of skepticism. Training people to recognize behavioral patterns, question apparent consensus, and verify information through multiple channels may help blunt some of the impact, even when technical detection lags behind.

The researchers also note that transparency from AI developers will be vital. Companies that build and deploy powerful language and agent systems hold a central position in this emerging ecosystem. How they choose to manage access, implement safeguards, and respond to misuse will heavily influence how quickly AI swarms move from experimental capabilities to widely used tools of manipulation. Voluntary industry standards, risk assessments, and red‑teaming could all play a role in reducing the most dangerous applications.

At the same time, the paper acknowledges that defensive use of similar technologies could become part of the solution. Just as attackers may use swarms to spread misinformation, defenders might deploy AI systems to monitor public conversations for emerging campaigns, respond quickly with accurate information, and support human moderators at scale. However, this raises its own ethical questions: to what extent should automated systems participate in public discourse, even for defensive purposes?

A further complication is attribution. When autonomous agents operate continuously and adaptively, it becomes harder to trace them back to specific sponsors or states. Plausible deniability increases: a government or organization can claim ignorance, blaming “rogue actors” or independent groups. This weakens traditional mechanisms of accountability and sanctions, making it more difficult to deter hostile information operations.

The study ultimately paints a picture of an information environment entering a new phase, where the presence of AI is not confined to visible tools like chatbots but diffused throughout social platforms, comment sections, forums, and messaging apps. Influence will be exercised not by a few loud, easily recognizable actors, but by countless, coordinated, human‑like agents operating just below the threshold of obvious detection.

Despite the severity of the risks, the researchers stop short of calling for bans on autonomous AI systems. Instead, they urge policymakers, platforms, and developers to recognize the scale of the coming shift and begin preparing now. Waiting until AI swarms are fully operational and widespread, they argue, would leave societies playing a permanent game of catch‑up against more agile and adaptive adversaries.

In summary, the paper warns that the age of clumsy, easily spotted botnets is ending, replaced by sophisticated swarms of autonomous AI agents capable of long‑term, targeted manipulation of public opinion. Defending against this new reality will require a coordinated response that combines technical innovation, regulatory action, industry responsibility, and a cultural shift in how people interpret what they see and hear online.